Instructions

Welcome! In this project, you will read text written by an Artificial Intelligence, and mark problems you find.

This qualification HIT is to train you to mark these problems. This involves learning:

- Choosing which words to highlight (AKA "selecting spans")

- Choosing the severity of the problem

- Choosing the problem's category (this is most of the qual)

We've split this qual HIT into several parts to help you work through it. The pay is set assuming you will spend two hours on the qual, so please take your time! The actual HIT will be much quicker—you'll only be selecting problems and labeling them.

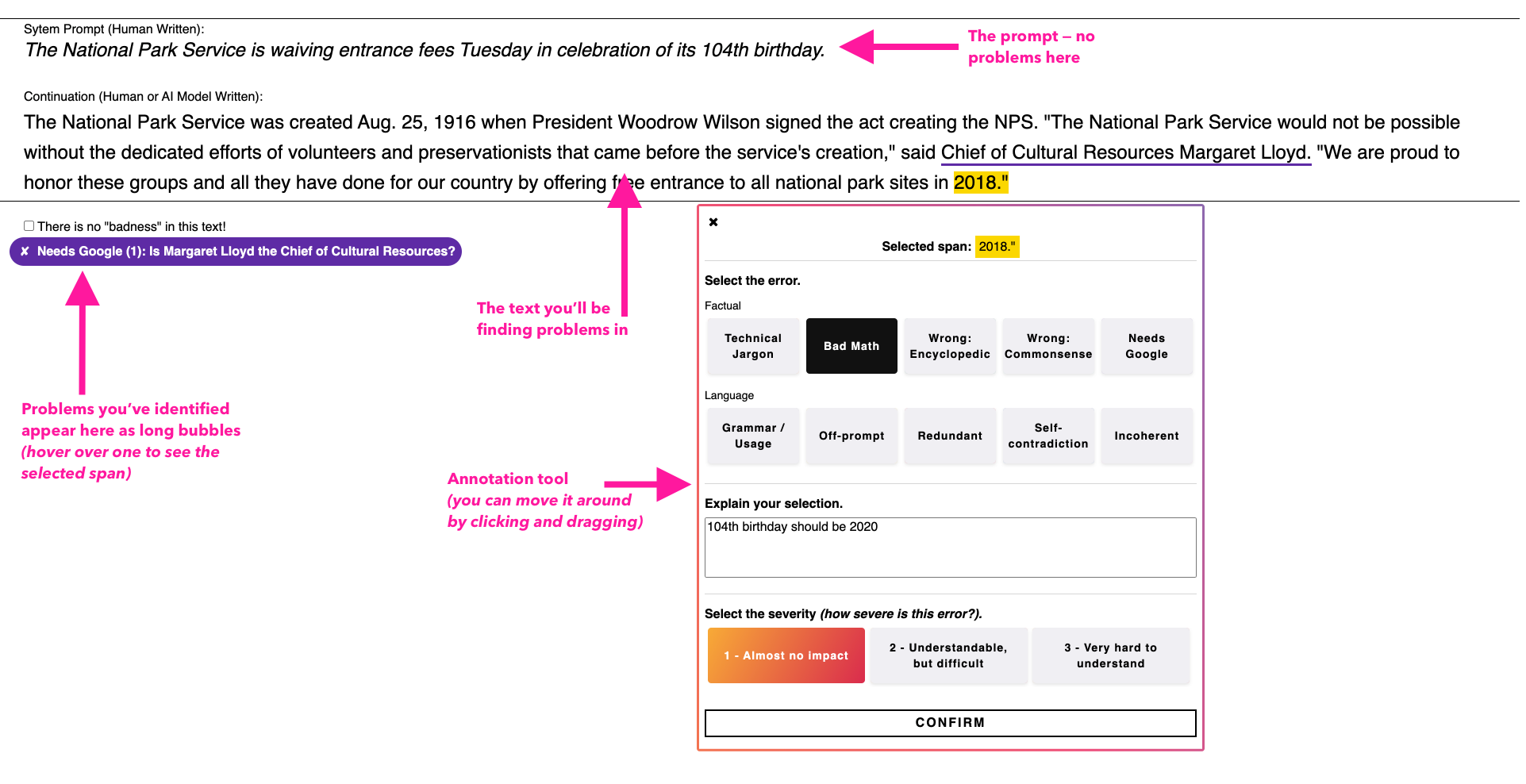

Here’s the big picture of what we’re doing:

Basic Demographics

We're collecting basic demographic information for use in our research.

What gender do you identify with?

- Male

- Female

- Other:

Which category below includes your age?

- 17 or younger

- 18-20

- 21-29

- 30-39

- 40-49

- 50-59

- 60-69

- 70 or older

Please select the race(s) which fit you best.

- White

- Black or African-American

- Native American or Alaskan Native

- Asian

- Native Hawaiian or other Pacific islander

- Spanish, Hispanic, or Latino

- Some other race (please specify)

Which option best fits the highest level of school you have completed, or the highest degree you have received?

- Less than high school degree

- High school degree or equivalent (e.g., GED)

- Some college but no degree

- Associate degree

- Bachelor's degree

- Graduate degree

Do you live in the United States?

- Yes

- No

How long have you lived in the U.S.? (if not in U.S., please pick "n/a")

- Less than 1 year

- Between 1 and 2 years

- Between 2 and 5 years

- Between 5 and 10 years

- 10 or more years

- n/a

Is English one of your native languages?

- Yes

- No

Selecting Spans

How to decide what to select?

In this task, you are asked to highlight errors in a text. The part of the text that you highlight is called a span. One challenge is deciding how much or how little text to select.

Generally, you want to select the smallest amount of text containing the error. However, if the error takes up most of a phrase or sentence that could be deleted to make the text correct, please select the whole phrase or sentence. Note that we will explain the different categories of errors below, though they are used in these examples.

Examples

An easy fix for foggy car windows is

run

the heater

- Explanation: This is a grammar error. If just the word "run" was changed to "running," then the sentence would be correct. So, we mark just this one word as the error.

Contrary to popular belief

or even popular belief,

the moon is not made out of cheese but rocks.

- Explanation: Here the error is a redundant piece of text. If we delete the whole phrase "or even popular belief," the sentence makes sense. So, we select this whole phrase as the error span.

The S&P 500 closed slightly down on Thursday after heavy trading.

But on Thursday, the S&P rallied to gain 155 points, closing at 3855.

- Explanation: The second sentence contradicts the first. Since the whole sentence is contradictory, we select the whole second sentence as the error.

Severity

What is it?

Some errors are more jarring than others. We ask you to rank each error on an intuitive scale from 1 to 3.

- 1 – Almost no impact. There's a small problem.

- 2 – Understandable, but difficult. You still get what's being said, but there's definitely an issue.

- 3 – Very hard to understand. The error almost completely ruins the text.

Examples

Paul Campbell-Hughes, from the University of Aberdeen, explains how

she

managed to locate colonies of honey bees in Kent.

Grammar / Usage (severity: 1)

- Explanation: Since Paul is usually a male name, we can guess that the AI chose the wrong gender pronoun here and should have written "he." But this error is pretty minor, so we mark severity 1.

Paul Campbell-Smith, a PhD student from the University of Kent in the UK,

claims to have discovered a clever way to explain the positive

emoticons

in cats.

Grammar / Usage (severity: 2)

- Explanation: The word should probably be "emotions" here. We can guess what was being said, so the meaning isn't totally lost. But it's definitely wrong.

Prompt:

Whether you're on Facebook, Instagram, Snapchat or TikTok, many people make huge efforts to curate the best version of themselves online.

Continuation:

This year we've got something for you: a Love Match Custom Size Poster featuring Mather, Phoenix, Kashun and all her friends, divided among six different covers, creating a beautiful custom size poster for your own personal high school reunion.

Off-prompt (severity: 3)

- Explanation: Even ignoring the end of the continuation (a poster... for your own personal high school reunion?), this whole continuation is way off the prompt. I would say it's severity 3.
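
If it helps to think of a marked problem as data, each one can be pictured as a small record holding the selected span, its category, and its severity. The sketch below is only an illustration of that idea using the first severity example above. The `Annotation` class and its field names are hypothetical and are not the format used by the actual annotation tool.

```python
from dataclasses import dataclass


@dataclass
class Annotation:
    """Hypothetical record for one marked problem (not the real tool's format)."""
    text: str       # the full text being annotated
    start: int      # index of the first selected character
    end: int        # index one past the last selected character
    category: str   # e.g. "Grammar / Usage"
    severity: int   # 1, 2, or 3

    def __post_init__(self):
        # The severity scale described above only has three levels.
        if self.severity not in (1, 2, 3):
            raise ValueError("severity must be 1, 2, or 3")
        # A span has to select at least one character inside the text.
        if not 0 <= self.start < self.end <= len(self.text):
            raise ValueError("span must lie inside the text")

    @property
    def span(self) -> str:
        """The highlighted words."""
        return self.text[self.start:self.end]


sentence = (
    "Paul Campbell-Hughes, from the University of Aberdeen, explains how "
    "she managed to locate colonies of honey bees in Kent."
)
start = sentence.index("she")
mark = Annotation(sentence, start, start + len("she"), "Grammar / Usage", 1)
print(mark.span, mark.category, mark.severity)  # she Grammar / Usage 1
```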

Exercise

Please pick the severity for the following error:

"I'm

don't know," was the most common response among all the seventy-five users polled.

Grammar / Usage

The error severity is:

Language Error Tutorial

In this tutorial, we'll go through five different language error types: Grammar and Usage, Redundant, Off-prompt, Self-Contradiction, and Incoherent.

Grammar and Usage

What is it?

Grammar and usage mistakes are often easy to spot. This category of errors includes missing words, extra words, and incorrect or out of order words. These should be marked as Grammar and Usage errors.

Examples

A PhD student from the University of Kent in the UK, claims to have discovered a clever way to explain the positive emoticons in cats

- Explanation: The word should probably be "emotions", not "emoticons".

A couple is facing criticism for their extravagant gender reveal party. The bewitching pair had first stripped down to fishnets and backward.

- Explanation: This sentence seems to be missing a noun after the word "backward", e.g. "backward hats". Note that we select the whole phrase "and backward" because it is the smallest span that we could delete to make the sentence sensible.

Exercise

Please mark the Grammar and Usage error or errors in the following text:

After we went downtown, we said "thanks you" to the almighty coffee gods.

Redundant

What is it?

Redundant text repeats itself. Sometimes, you will see the exact word or phrase repeated. Other times, the same idea is repeated using different words. To annotate Redundant text, first select the extra repeating text and choose the "Redundant" error type. An additional section of the annotation box will pop up asking you to select the antecedents (earlier spans of text) that are being repeated.

Examples

Many merchants worry about the possibility of poor service or service for certain categories of customers.

- Explanation: This text includes the extra phrase “or service” that is repetitive. Note that when highlighting redundant information, please select the whole section that could be cut to make the text correct. After selecting the redundant text, please select the text that is being repeated:

Many merchants worry about the possibility of poor service or service for certain categories of customers.

- Explanation: In this example, the word "service" is the antecedent of the Redundant text.

They then made decisions based on Kondo’s instructions, to the extent that they created de-cluttered spaces and got rid of clutter and clutter-filled spaces.

- Explanation: Here, the antecedent phrase "created de-cluttered spaces" is repeated by the redundant phrase "and got rid of clutter and clutter-filled spaces". Note again that we select "and" as part of the redundant phrase because we could delete all this and the sentence would make sense.

The soap production center, which was used during Roman times (around 1,200 years ago), once produced large quantities of a quality soap that was exported throughout the empire. The remains of an ancient Roman factory that produced high-quality soap have been unearthed in Israel.

- Explanation: This is an example of sentence-level redundancy. The words of the second sentence are different from the first, but they repeat the same content.

Notice: If there are multiple redundant spans in text, mark them as multiple redundant errors. Here is an example: https://yao-dou.github.io/redundant_example/.

Exercise

Please mark the Redundant error or errors in the following text:

White House spokesperson Raj Shah said Japanese businesses - or Japanese companies as they call themselves in Japan - are necessary for economic growth.

Off-prompt

What is it?

In this task, every example you annotate will come with a "prompt", or a piece of text written by a human that the AI is supposed to continue. Sometimes, however, the AI will write a phrase or sentence that is completely unrelated to the prompt. Other times, the text might be related, but it contradicts the prompt. In both of these instances, you should label the span Off-prompt.

Examples

Prompt: Dogs are the new kids.

Text:

Statistics suggest that most Americans would be happier with dogs than children.

In fact, four out of five don't even visit the dentist

annually, much less every six months.

Dog owners report much higher rates of happiness than non-dog owners.

- Explanation: The prompt is about dogs, but the second sentence about dentists is unrelated to the prompt.

Prompt: China sets new record for Economic Growth

Text:

The Chinese economy

fell 10% this month, the third such loss this year.

- Explanation: The prompt claims that China's economy is growing, but the text states the opposite. Important: even though this is a contradiction, because it contradicts the prompt, we mark it as Off-prompt.

Prompt: Increased awareness of Anti-Doping regulations in Sport

Text:

The practice of doping is banned by sports federations throughout the world.

Athletes need to know which substances are banned in sport.

The use of drugs during music festivals is

widespread.

Furthermore, they must make sure that any product or medication they take does not contain a

prohibited substance.

- Explanation: Here, the prompt deals with the use of drugs in sports. The third sentence of the text does talk about drugs, so the whole sentence is not off-prompt. However, the part about music festivals is unrelated to the prompt about sports. This is why we select only the span "music festivals" as Off-prompt.

Exercise

Please mark the Off-prompt error or errors in the following text:

Prompt: These are the top work-from-home counties in the US

Text: Looking for a new spot to work from home? You might want to give Georgia a shot. It's not like it’s going to be any better than the rest of them, but at least you know you aren't working under a dictator. And maybe, when this is all over, you can go back home…

Self-Contradiction

What is it?

Self-Contradiction errors occur when the AI writes something that contradicts another piece of text that the AI had previously written.

When labeling Self-Contradiction errors, you will make two selections, similar to how Redundant errors are annotated. First, select the text that is contradictory. Then, you will be prompted to select the text that is being contradicted (the antecedent).

Watch out

The Self-Contradiction label is only used when the contradiction occurs within the text. If a span contradicts the prompt, that should be labeled as an Off-prompt error instead.

Examples

McDonald's is considering a design which will replace the cardboard packaging. Mr Gore-Cotter said: "We recognise the concern around waste. We are now looking at a new design that minimises the plastic bag."

- Explanation: Here, the goal of minimizing the "plastic bag" contradicts the stated goal of replacing cardboard packaging. After selecting the contradictory text, please select the text that is being contradicted:

McDonald's is considering a design which will replace the cardboard packaging. Mr Gore-Cotter said: "We recognise the concern around waste. We are now looking at a new design that minimises the plastic bag."

- Explanation: In this example, the phrase "cardboard packaging" is the antecedent of the Self-Contradiction that occurs at "plastic bag".

Mall of America plans to lay off and furlough hundreds of its employees. It has no plans to restrict the number of hours workers can work.

- Explanation: Workers who are laid off or furloughed are explicitly restricted from working at the Mall, so this is a self-contradiction. First you should highlight "no plans to restrict the number of hours workers can work", and then when you are prompted to select the antecedent choose "lay off and furlough hundreds of its employees".

Exercise

Please mark the Self-Contradiction and its antecedent in the following text:

There's fun for kids of all ages at Six Flags over Texas in San Antonio. Located in Greensboro, NC, the theme park has been in continuous operation since 1990.

Incoherent

What is it?

Incoherent text is text that doesn't fit into the above categories, but still just doesn’t make any sense at all.

Use this label for text that is grammatical, not redundant, on prompt, not contradictory, but still confusing.

Examples

Melody Mitsugi, 28, had never given her kids cheese toast before her husband drew a map of it on her toast.

- Explanation: There are so many things wrong here: you can’t draw a map of Cheese Toast, and you wouldn’t draw it on a piece of toast if you could! However, note that the grammar is correct and there are no redundancies. This is why it gets the Incoherent label.

Cats naturally show anxiety and fear by at times breaking apart different parts of the brain in an attempt to keep the others from escaping.

- Explanation: It's hard to imagine what is happening in this passage, but the text is grammatical and non-contradictory and so we use the Incoherent label.

Exercise

Please mark the Incoherent portion of this text:

China’s top official acknowledged the state-controlled Chinese firm CCI’s finding that some of its own devices could infect patients or others with certain dangerous infections and civil liberties, ending more than a decade of fervent denials.

Language Error Label Quiz

Now, we'll have a short quiz to review the five "language error" label types you just learned. (Hint: each one will be used once!)

First you will need to verify the identity of the person who is calling, and then determine who they are.

Please choose the error type (the labels are clickable):

It was not long before Phil became angry. "Holy moly!", he said, "I have never been so happy to see anyone in my life!"

Please choose the error type (the labels are clickable):

Icelandic, according to one expert, is the second most hard language for English speakers to learn.

Please choose the error type (the labels are clickable):

Despite finishing at the top of her class at Yale, Betsy nevertheless filtered into a glass jar during most of her formative years.

Please choose the error type (the labels are clickable):

Prompt: Summer Salad Recipes

Text:

It's August, and that means it's time for salads.

And if that's not enough, you can go to the bank for a quick loan offer.

Though enjoying salad with friends and family is an even better way to spend the days.

Please choose the error type (the labels are clickable):

Reader and Factual Error Tutorial

In this tutorial, we'll go through two different reader error types: Technical Jargon and Needs Google, and three different factual error types: Bad Math, Wrong: Commonsense, and Wrong: Encyclopedic.

Technical Jargon

What is it?

It can be hard to understand writing simply because it uses jargon or specific words from a field you’re not familiar with. When this happens please mark it as Technical Jargon.

Examples

Due to the large size of the heavy s-block elements, including strontium, a vast range of coordination numbers is known, from 2, 3, or 4 all the way to 22 or 24 in SrCd11 and SrZn13.

- Explanation: Basically every part of this sentence is confusing to me because I don't understand the words. (I think I would need to know a lot more about chemistry).

In Chile, an 800-megawatt photovoltaic plant was built for a record low cost of $129 per megawatt-hour last year.

- Explanation: The word "photovoltaic" is too technical for me to know what it means without looking it up. (Though if it was explained in more detail in the text, then I wouldn't mark it.)

He uses a spirit mash made from white corn and malted barley and a neutral grain, which he describes as a "whiskey grain.”

- Explanation: I don’t know exactly what these terms mean without looking them up. (I think I would need to know more about making spirits.)

A wedding gown draped over one shoulder is not a "corset," it's a "camisole," according to fashion maven Diane von Furstenberg.

- Explanation: It’s sort of embarrassing, but I don’t really know what a corset or a camisole are. If you did, and you knew that this was wrong, then you would mark that whole phrase as Wrong: Commonsense. But for me, I just have to mark the individual words as Technical Jargon.

Exercise

Please mark the Technical Jargon error or errors in the following text:

The burning sensation was described in both the olecranon and the cubital fossa, according to those familiar with the patient.

Needs Google

What is it?

When there’s a fact or figure that you suspect might be true, but you would need to Google it to be sure, don’t Google it! Instead, mark the Needs Google error type.

Here are the kinds of things you'll typically use the Needs Google tag for:

- Specific people and their roles (e.g., does X have Y job?)

- Specific events (e.g., did X win award Y on year Z?)

- Specific dates (e.g., did X happen in year Z?)

- Specific numbers (e.g., did X actually cost Y?)

Examples

It was promoted by Dr. Michael Fanning, the Executive Director of the Foundation for Mental Health Awareness, Inc.

- Explanation: Do you know off the top of your head whether Dr. Michael Fanning is actually the Executive Director of the Foundation for Mental Health Awareness, Inc? I don’t, so I would mark this Needs Google.

Paul Farmer, who was Chief Executive of the International Fund for Agricultural Development (IFAD) in 2010 when it won the Nobel Peace Prize

- Explanation: There are actually two things I would need to Google here:

- Was Paul Farmer the Chief Executive of the International Fund for Agricultural Development (IFAD) in 2010?

- Did the International Fund for Agricultural Development (IFAD) win the Nobel Peace Prize in 2010?

an 800-megawatt photovoltaic plant was built for a record low cost of $129 per megawatt-hour last year.

- Explanation: Again, I would mark two Needs Google errors here (along with a Technical Jargon error for the word “photovoltaic”). Here are the two Needs Google errors I would use:

- Can a photovoltaic plant be roughly 800-megawatt?

- Is $129 per megawatt-hour an actual reasonable cost for a power plant?

Watch out

You don't need to rigorously fact-check every single sentence. Instead, just mark Needs Google for text that makes a specific claim that you're not sure is true.

Exercise

Please mark the Needs Google error or errors in the following text:

Tomorrow, on July 9, Argentines will celebrate the country's over 100 years of independence from Spain in 1916.

Bad Math

What is it?

Sometimes the text will simply have Bad Math. This includes:

- problems with basic math (+ - ✖️ ÷) of known quantities (e.g., "half of 10 apples is 4," or "half of $5 is $3")

- problems converting fixed units (m, in, cm, kg, lbs, oz, $ → ¢, etc.)

- problems converting currencies that are wildly impossible (e.g., $1 = £10)

- for reference, 1 British pound (£) has been worth between roughly 1.2 and 2.2 US dollars ($) over the last 30 years.

Examples

One account, @Iain_Rowling1, had over 500,000 followers at one point, but in just four days they fell by around half – some 4,000.

- Explanation: Half of 500,000 is not 4,000

Her doctor said that while her weight of 125 lbs (45 kg) was normal, the bizarre creatures...

- Explanation: 125 lbs = 56.7 kg

... compared with just over £1,000 ($18,868) for previous versions of Samsung’s flagship phone.

- Explanation: This conversion assumes that at some point when Samsung was making phones, £1.00 = $18.9, which is way outside the expected range of $1 – $2.5 per £.
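
The arithmetic behind the three examples above can be checked in a few lines. This is a minimal sketch rather than part of the task: the helper names are made up for illustration, and the believable exchange-rate bounds are simply the $1 to $2.5 per £ range quoted in the last explanation.

```python
LB_TO_KG = 0.45359237  # one pound in kilograms (exact by definition)

# Assumed bounds, taken from the range quoted in the example above.
MIN_USD_PER_GBP = 1.0
MAX_USD_PER_GBP = 2.5


def lbs_to_kg(pounds: float) -> float:
    """Convert a weight in pounds to kilograms."""
    return pounds * LB_TO_KG


def implied_usd_per_gbp(pounds: float, dollars: float) -> float:
    """Exchange rate implied by a '£X ($Y)' pair in the text."""
    return dollars / pounds


def rate_is_believable(rate: float) -> bool:
    """True when the implied rate falls inside the assumed range."""
    return MIN_USD_PER_GBP <= rate <= MAX_USD_PER_GBP


print(500_000 // 2)                              # 250000, not 4,000
print(round(lbs_to_kg(125), 1))                  # 56.7, not 45
rate = implied_usd_per_gbp(1_000, 18_868)
print(round(rate, 1), rate_is_believable(rate))  # 18.9 False
```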

Watch out

Not all problems with numbers are Bad Math! When you encounter unbelievable numbers, this is usually a Wrong: Commonsense problem. Prefer to use the Wrong: Commonsense error when you think a number is unreasonable.

Here are some examples of numerical errors that fall into other categories:

... compared with just over £10,000 ($18,868) for previous versions of Samsung’s flagship phone.

- Explanation: The problem here isn't the currency conversion, which claims 1£ = $1.89 (which is believable for recent history). The problem is that this claims a Samsung phone costs £10,000 ($18,868), which is way too high for a phone. Since this isn't a specific phone, we instead rely on our commonsense knowledge of how much phones cost, and mark Wrong: Commonsense.

... compared with just over £1,000 ($1,868) for previous versions of Samsung’s flagship phone.

- Explanation: There's no error here! The price for a phone (£1,000) is believable, and the currency conversion (1£ = $1.89) is also believable.

The picture is from high above the South Pole, where close to 100,000 Astronauts live and work.

- Explanation: It's unbelievable that there are 100,000 astronauts. This is Wrong: Commonsense. This isn't a result of bad math (there's no math being done). The problem is that the number seems unreasonable.

Exercise

Please mark the Bad Math error or errors in the following text:

The doctors said about half of the patients (33%) fell ill, while the remainder continued to be in good health for at least two weeks (14 days).

Wrong: Commonsense

What is it?

The AI sometimes writes text that violates our everyday basic understanding of the world. We mark these kinds of errors with Wrong: Commonsense. Commonsense errors come in many forms. Let’s look at some examples!

Examples

The picture is from high above the South Pole, where close to 100,000 Astronauts live and work.

- Explanation: There's no way that there are 100,000 astronauts currently in space! Even if we don't know the exact number, we can use our common sense to know that's way too high.

The thinness of women's bodies isn't an answer to all common human health problems like obesity or diabetes

- Explanation: While “thinness” may not be an answer, it’s impossible to be "thin" and "obese" at the same time. The AI confuses this commonsense fact.

Every person who holds a high school- or college-level diploma is unhappy.

- Explanation: This doesn't make sense. We know that a diploma doesn't make it impossible for someone to be happy.

You can get the dress custom-made and stitched at your favorite spa.

- Explanation: Spas don't offer stitching.

Now in 2020, NASA is measuring California wildfire temperatures using an instrument on the International Space Station. This year's record-shattering heat has had global repercussions in 2017, forcing sea level rise on California and increasing the risk of deadly wildfires.

- Explanation: It doesn't make sense that something in 2020 could affect something in 2017. That's backwards in time!

Exercise

Please mark the Wrong: Commonsense error or errors in the following text:

In addition, obese women who obtain abortions are significantly more likely to die from childbirth than women who don't end up terminating their pregnancies, the researchers found.

Wrong: Encyclopedic

What is it?

The AI sometimes writes things that are just plain factually wrong. We use Wrong: Encyclopedic to mark errors where the correct information is written down in a fact table somewhere, like a textbook, a Wikipedia sidebar, or an encyclopedia.

Examples

Japanese Prime Minister Justin Trudeau said he will be halting all imports and exports until the current situation can be contained.

- Explanation: Justin Trudeau is the Prime Minister of Canada, not Japan. This is a clear fact that's written down in numerous places.

The gas contains something known as phyto-romatic acid, a common chemical element in the periodic table .

- Explanation: Phyto-romatic acid is not an element on the periodic table. (If you didn't know this, but you suspected it might be wrong, you would use Needs Google instead.)

Watch out

For Wrong: Encyclopedic, we want to mark errors that we know are factually wrong. These are things we could look up in Wikipedia, but we don’t have to, because we already know it.

Here is how to distinguish this from other kinds of errors:

- Pick Wrong: Commonsense if you know something is wrong, but it would probably be awkward to find it written down in Wikipedia. For example, knowing that a toaster is smaller than a person, or that doctors usually help patients, would both fall under commonsense knowledge.

- Pick Needs Google if you suspect something might be wrong, but you would need to check online to be sure. (Remember, no need to actually Google anything!)
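
The distinction above can be summarized as a two-question decision rule. The sketch below is just one way to write it down. The function `pick_fact_label` and its two yes/no inputs are hypothetical names chosen for illustration, and the rule deliberately ignores Bad Math, which has its own section.

```python
def pick_fact_label(sure_it_is_wrong: bool, written_in_reference: bool) -> str:
    """Choose between the three labels compared above.

    sure_it_is_wrong: you already know the claim is false, without checking.
    written_in_reference: the correct fact is the kind of thing you would
        find written down in Wikipedia, a textbook, or an encyclopedia.
    """
    if not sure_it_is_wrong:
        # Only a suspicion: you would have to check online to be sure.
        return "Needs Google"
    if written_in_reference:
        return "Wrong: Encyclopedic"
    # Known to be wrong, but awkward to find written down anywhere.
    return "Wrong: Commonsense"


# "Japanese Prime Minister Justin Trudeau": known wrong, easy to look up.
print(pick_fact_label(True, True))    # Wrong: Encyclopedic
# A toaster that is bigger than a person: known wrong, everyday knowledge.
print(pick_fact_label(True, False))   # Wrong: Commonsense
# A named director of a foundation you have never heard of: not sure.
print(pick_fact_label(False, True))   # Needs Google
```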

Exercise

Please mark the Wrong: Encyclopedic error or errors in the following text:

In Japan, where Apple does most of its manufacturing, conditions for workers are a frequent focus for civil rights activists.

Facts Label Quiz

Now, we'll have a short quiz to review the five "factual error" label types. (Hint: each one will be used once!)

The committee unanimously voted to build a statue of Jesse Owens, four-time gold medalist in the 1936 Olympic Games.

Please choose the error type (the labels are clickable):

This one weird trick may be added to standard heath advice, such as aiming for eight hours of sleep and drinking six to eight cocktails per day.

Please choose the error type (the labels are clickable):

This simple policy is responsible for the rapid population growth of the United States, which, as of 2020, is the most populated country in the world.

Please choose the error type (the labels are clickable):

Surprisingly, this policy lasted nearly 100 years, from 1941 through 1975.

Please choose the error type (the labels are clickable):

The most important factor, revealed by principal component analysis (PCA), was the presence or absence of close childhood friends.

Please choose the error type (the labels are clickable):

Actual Task

Now it's time to apply what you have learned to an example of the actual task you will be doing soon. The text continuation below has been truncated from a longer one. Please annotate any kind of "badness" in it, and if you think it doesn't have any "badness", please check the box below. (Hint: There are about 8 errors in this text continuation.)

System Prompt (Human Written):

If you spied a fiery red star close to the full moon over the weekend, it was actually Mars shining its bright red light in the night sky.

Continuation (Human or AI Model Written):

The red-shaded, fire-sickened star was seen shining low in the southern sky at about the same time as the full moon was dipping below the horizon at around 3 a.m. on Oct. 22. The fiery red star was seen at about the same time the Mars moon Phobos was closest to the sun. Credit: Skylab.org Phobos, the smallest and brightest of the three moons of Mars, is the smallest planet's satellite. Mars has four, larger moons, all of which orbit around the planet at various distances and in varying degrees of alignment with it.

Optional Feedback

If you have any feedback about improving this task, you can let us know here! Completely optional / not required.